The term "edge computing" has become one of the IT world's latest buzzwords. The concept itself is not new, however, and has been around for a while. In fact, almost every organization probably already has one or more use cases that fall into the edge computing bucket.

Edge computing is a broad term for what is essentially a computer that is physically located near the action. The edge device could be a robot, a point-of-sale system, or a machine learning/artificial intelligence system. Edge devices are typically among the most mission-critical and heavily regulated systems in your entire organization. Without them, automakers can't manufacture their cars, restaurants can't sell their sandwiches, and hospitals can't treat their patients. These same functions also put these systems in scope for regulations such as PCI, HIPAA, and NERC-CIP.

By definition, edge devices live out in the world, beyond the physical control of centralized IT teams. This exposes them to additional constraints such as low bandwidth, high latency, limited resources, and extreme scale, all without the local personnel or physical controls that IT operators expect in a data center or cloud.

How, then, do teams manage these devices efficiently? They are still computers running an operating system that needs to be configured, patched, updated, health-checked, and audited.

Sending technicians out with USB drives is inefficient and cannot meet the speed your business demands. How can your company provide the best customer experience if your competitors can deliver updates and new offerings in real time while you're mailing USB drives and scheduling technicians?

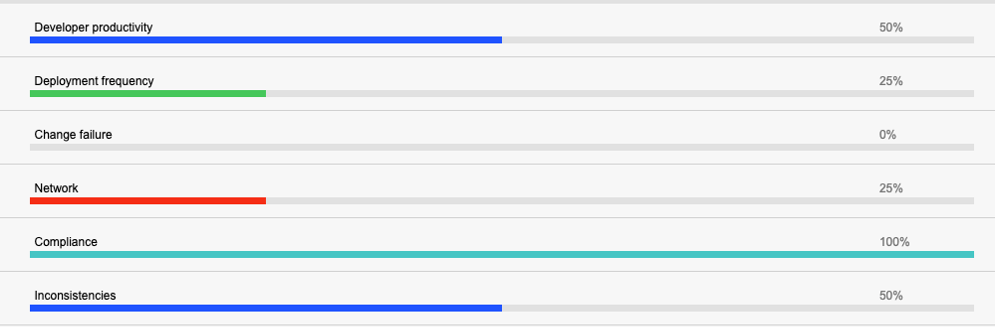

During the webinar "Fast & Reliable Edge Computing Automation with Chef", attendees affirmed this, citing compliance as the biggest challenge they face in supporting edge computing devices.

An all-too-familiar scenario

All of these challenges lead to an unfortunate and all-too-familiar scenario for the operations teams tasked with supporting edge implementations.

It’s after hours…

- An application update has to be rolled out on 10,000 systems

- Many of the systems have different specs (model number, configuration, build, OS, application version), and it's unknown whether the update will work on every system variation

- The dev team has spent months testing in the "lab", but past deployments have shown that the lab does not adequately simulate the constraints and complexities of production

- The release team is anxious about the update, but they deploy anyway – and wait to hear from the ops team on how it went

- The next day, during business hours, the ops team reports that 9,000 systems were updated, 500 were missed, 200 are down, and the status of 300 is unknown

And the business tells you they want to do this all more frequently!

This is the typical scenario that we see with customers. They have not been able to effectively implement an automated approach to application delivery for their edge computing devices.

This happens for a number of reasons: homegrown tools that are hard to maintain, inconsistent delivery processes that don't scale, and automation tools that can't work in low-bandwidth, high-latency conditions. A lack of coordination also causes ongoing configuration drift between development and production environments.

Solving the problem

Addressing these challenges requires development and operations teams to work closely together and implement a common set of best practices.

Edge computing automated delivery guidelines:

- Implement an autonomous automation solution – The solution needs to work in low-bandwidth environments, self-heal, roll back, and scale easily

- Adopt an automation “as code” approach – DevOps practices like infrastructure as code and compliance as code are required for success on the edge

- Validate delivery status in "near" real time – Comprehensive dashboards make it quick and easy to visualize delivery status across the fleet

- Continuous compliance – Verify your compliance posture at any moment and generate evidence with the click of a button
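To make "compliance as code" and "generate evidence" a little more concrete, here is a minimal Python sketch, assuming each edge device reports a small dictionary of facts about itself. The fact names, control IDs, version threshold, and the `audit` and `fleet_summary` helpers are all hypothetical and chosen for illustration only. In Chef's own stack this role is filled by Chef InSpec, whose controls are written in a Ruby DSL; the sketch does not reproduce InSpec's API, it only shows the idea of rules kept as versionable code that produce a machine-readable evidence record.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical facts as an edge device might report them (names are illustrative).
Facts = Dict[str, object]


@dataclass(frozen=True)
class Control:
    """One compliance rule expressed as code (hypothetical structure, not InSpec)."""
    control_id: str
    description: str
    check: Callable[[Facts], bool]


# Example controls; IDs and thresholds are assumptions, not taken from any standard.
CONTROLS: List[Control] = [
    Control("ssh-01", "SSH root login is disabled",
            lambda f: f.get("ssh_permit_root_login") == "no"),
    Control("pkg-01", "OpenSSL is at or above the minimum approved version",
            lambda f: tuple(f.get("openssl_version", (0, 0, 0))) >= (3, 0, 7)),
    Control("fw-01", "Host firewall is enabled",
            lambda f: f.get("firewall_enabled") is True),
]


def audit(node: str, facts: Facts, controls: List[Control] = CONTROLS) -> dict:
    """Evaluate every control against one node's facts and return an evidence record."""
    results = [
        {"id": c.control_id, "description": c.description, "passed": c.check(facts)}
        for c in controls
    ]
    return {
        "node": node,
        "compliant": all(r["passed"] for r in results),
        "results": results,
    }


def fleet_summary(reports: List[dict]) -> dict:
    """Roll per-node evidence records up into a fleet-wide count for a dashboard."""
    compliant = sum(1 for r in reports if r["compliant"])
    return {"total": len(reports), "compliant": compliant,
            "non_compliant": len(reports) - compliant}


if __name__ == "__main__":
    facts = {"ssh_permit_root_login": "no", "openssl_version": (3, 0, 2),
             "firewall_enabled": True}
    report = audit("store-0042-pos-1", facts)  # hypothetical node name
    for r in report["results"]:
        print(r["id"], "PASS" if r["passed"] else "FAIL")
    print(fleet_summary([report]))
```

Because the controls are plain data plus small check functions, they can live in version control next to the infrastructure code, be reviewed like any other change, and run on every node on a schedule. That is the property the "as code" guideline is after, whatever tool actually implements it.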

Chef enables DevOps teams supporting edge computing devices to eliminate deployment failures and accelerate release schedules by providing a code-based, autonomous automation solution that covers configuration, hardening, patch management, compliance audits, and application delivery. By using Chef edge solutions, clients can:

- Scale continuous delivery to the edge and deploy applications 90% faster

- Enable compliance as code and security checks for regulations such as HIPAA and PCI

- Validate deployments in real time and eliminate 95%+ of run-time failures

For more on how Chef helps DevOps teams streamline operational support for edge computing scenarios, watch the short overview video "Chef Enterprise Automation Stack Applied to the Edge".

Customers like Walmart have chosen Chef to help them improve their delivery of edge solutions. To speak with a Chef representative about how we may be able to help you optimize an existing or planned edge implementation, contact us here.